There are many reasons why we might want to retrieve data from the internet, particularly as many academic research resources are becoming increasingly available online.

Examples include gene sequence databases for a range of organism (flybase, fishbase FIND URLS), image databases for computer vision benchmarking and/or training machine learning based systems (URLS), analyzing academic publication databases such as the ArXiV preprint server (URL), to name but a few.

Python makes retrieving online data relatively simple, even when just using the Standard Library! In fact, we did just that for one of the introductory exercises, though you were instructed to “blindly” copy and paste the relevant (two!) lines of code:

import urllib.request

text = urllib.request.urlopen("http://www.textfiles.com/etext/AUTHORS/SHAKESPEARE/shakespeare-romeo-48.txt").read().decode('utf8')

The Standard library module in question, urllib,

contains a submodule request with the

urlopen function that returns a file-like

object (i.e. that has a read member function)

which we can use to access the web resource, which

in the case of the exercise was a remotely hosted

text file.

Servers have the ability to deny access to their resources to web robots i.e. programs that scour the internet for data, such as spiders/web crawlers. One way of doing this is to the robots.txt file located at the url.

For the purpose of our academic exercise, we’re going to identify ourselves as web browsers, such that we gain access to such resources.

To do so, we need to set the user-agent associated with our request, as follows:

import urllib.request

req = urllib.request.Request(

url,

data=None,

headers={

"User-Agent": "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2228.0 Safari/537.36"

}

)

text = urllib.request.urlopen(req).read().decode("utf-8")

When interfacing with web-pages, i.e. HTML documents, things get a little more tricky as the raw data contains information about the web-page layout, design, interactive (Javascript) libraries, and more, which make understanding the page source more difficult.

Fortunately there are Python modules for “parsing” such information into helpful structures.

Here we will use the lxml module.

To turn the difficult to understand text into a structured object, we can use

from lxml import html

import urllib.request

url =

text = urllib.request.urlopen(url).read().decode('utf8')

htmltree = html.fromstring(text)

The htmltree variable holds a tree structure that represents the web-page.

Background

For more information about the node structure of HTML documents, see e.g. http://www.w3schools.com/js/js_htmldom_navigation.asp

For more general information about HTML see https://en.wikipedia.org/wiki/HTML

Now the the raw text has been parsed into a tree, we can query the tree.

To find elements in the html tree, there are several mechanisms we

can use with lxml.

CSS selector expressions are applied using cssselect and correspond to

the part of the CSS that determines which elements the subsequent

style specificiations are applied to, in a web page’s CSS (style) file.

For example, to extract all divs, we would use

divs = htmltree.cssselect("div")

or to get all hyperlinks on a web page,

hrefs = [a.attrib('href') for a in htmltree.cssselect("a")]

XPath (from XML Path Language) is a language for selecting nodes from an XML tree.

A simple example to retrieve all hyperlinks (as above) would be:

hrefs = htmltree.xpath("//a/@href")

A quick and simple approach to determining a specific xpath expression for a given element, you can inspect the corresponding element using e.g. Google Chrome’s inspect option when right clicking on a web-page element.

In the element view, right click and select Copy > Copy XPath.

More information about the XPath syntax can be found here: https://en.wikipedia.org/wiki/XPath

Write a script that

Important notes

Use the functions

urllib.request.Request( ... ) to set the User Agenturllib.request.urlopen(request).read().decode('utf8') to pull the texthtml.fromstring( ... ) to create the element tree[ELEMENTTREEOBJECT].xpath or [ELEMENTTREEOBJECT].cssselect to select the appropriate elements.

from lxml import html

import urllib.request

import matplotlib.pyplot as plt

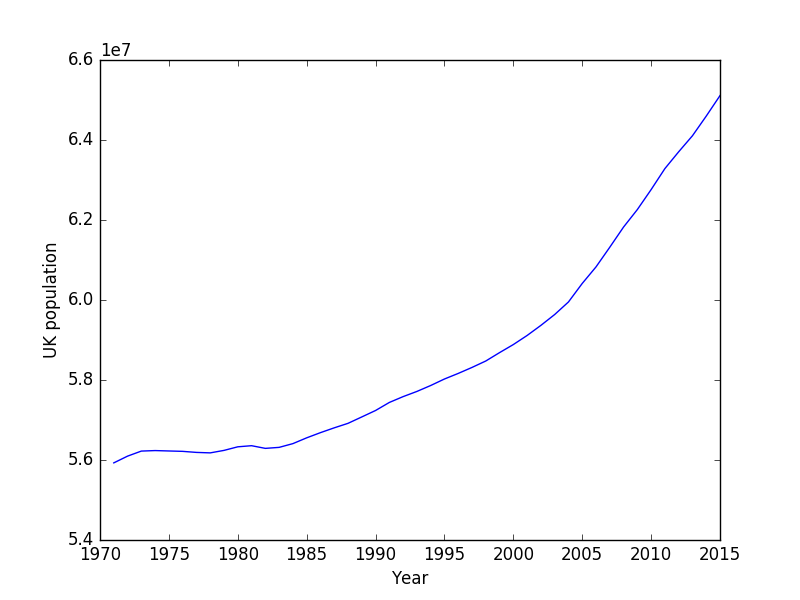

url = "https://www.ons.gov.uk/peoplepopulationandcommunity/populationandmigration/populationestimates/timeseries/ukpop/pop"

req = urllib.request.Request(

url,

data=None,

headers={

"User-Agent": "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/41.0.2228.0 Safari/537.36"

}

)

text = urllib.request.urlopen(req).read().decode("utf-8")

htmltree = html.fromstring(text)

data = htmltree.xpath("//td/text()")

year = []

pop = []

for i in range(0, len(data), 2):

year.append(int(data[i]))

pop.append(int(data[i+1]))

plt.figure()

plt.plot(year, pop)

plt.xlabel("Year")

plt.ylabel("UK population")

plt.savefig("uk_population.png")

produces

Very simple data passing to the server is often done in the form of URL parameters.

Whenever you have seen a url that looks like, e.g.

https://www.google.co.uk/webhp?ie=UTF-8#q=python

(feel free to click on the link!) you are passing information to the server.

In this case the information is the form of two variables, ie=UTF-8 and

q=python. The first is presumably inforation about the encoding that my

browser requested, and the second is the query term I had asked for, in

this case from Google.

Simple URL arguments follow an initial question mark, ?, and are ampersand (&) separated key=value pairs.

Google uses a fragment identifier, which starts at the hash symbol #

for the actual query part of the request, q=python.

For such websites, passing data to the server is a relatively simple task of determining which query parameters the site takes, and then encoding your own.

For example if we create a list of search terms

terms = [

"python image processing",

"python web scraping",

"python data analysis",

"python graphical user interface",

"python web applications",

"python ploting",

"python numerical analysis",

"python generators",

]

we could generate valid search URLs using

baseurl = "https://www.google.co.uk/webhp?ie=UTF-8#q="

urls = [ baseurl + t.replace(" ", "+") for t in terms ]

for url in urls:

# Perform request, parse the data, etc

Here we simply determined the “base” part of the URL and then added on the search terms, replacing the human readable spaces with the “+” seperators.

urllib also provides a function to perform this parameter encoding for us,

data = {'name' : 'Joe Bloggs', 'age': 42 }

url_values = urllib.parse.urlencode(data)

print(url_values)

generates

age=42&name=Joe+Bloggs

For complicated encodings, this is certainly better than manually generating the parameter string.

So far we have been dealing with what are known as GET requests.

GET was the first method used by the HTTP protocol (i.e. the Web).

Nowadays there are several more methods that can be used:

Most of these are relatively technical methods; the POST method, hoewever, is in widespread usage as the standard mechanism to send data to the server.

POST requests are commonly associated with forms on web pages. Whenever a form is submitted, a POST request is made at a specific URL, which then elicits a response.

Many web-based resources use forms to retrieve data.

Luckily for us, making a POST request is relatively straight forward with Python; the tricky part is usually determining the POST parameters.

For example, to POST data to Exeter Universities phone directory (http://www.exeter.ac.uk/phone/) we could use

import urllib.request

import urllib.parse

url = "http://www.exeter.ac.uk/phone/results.php"

data = urllib.parse.urlencode({"page":"1", "fuzzy":"0", "search":"Jeremy Metz"}).encode("utf-8")

req = urllib.request.Request( url, data=data )

text = urllib.request.urlopen(req).read().decode("utf-8")

urlliburllib comes with Python and is relatively straight forward to use.

There are however alternative libraries that achieve the same tasks, such

as request, which aims to be a simple alternative to urllib.

For full web crawling tasks, scrapy is a popular web spider/crawler library,

but is beyond the scope of this section.

Many modern websites use Javascript to generate generate content dynamically.

This makes things trickier when we try to scrape data from such pages, as the raw page text doesn’t contain the data we want!

Ultimately, we will need to run the Javascript to generate the data we want, and there are several options for doing so, including

Webkit, mentioned above, is the layout engine component used in many modern browsers like Safari, Google Chrome, and Opera (Firefox, on the other hand uses an equivalent engine called Gecko).

As an example, the site http://pycoders.com/archive/ contains a javascript generated list of archives.

Using PyQt5 we can pull this list using

import sys

from PyQt5.QtWidgets import QApplication

from PyQt5.QtCore import QUrl

from PyQt5.QtWebKitWidgets import QWebPage

from lxml import html

#Take this class for granted.Just use result of rendering.

class Render(QWebPage):

def __init__(self, url):

self.app = QApplication(sys.argv)

QWebPage.__init__(self)

self.loadFinished.connect(self._loadFinished)

self.mainFrame().load(QUrl(url))

self.app.exec_()

def _loadFinished(self, result):

self.frame = self.mainFrame()

self.app.quit()

url = 'http://pycoders.com/archive/'

r = Render(url)

result = r.frame.toHtml()

htmltree = html.fromstring(result)

archive_links = htmltree.xpath('//div[2]/div[2]/div/div[@class="campaign"]/a/@href')

print(archive_links)